RSJ Tutorial on

Robot Audition Open Source Software HARK

Flyer Available!

Date and place

| Date & Time | 9:00 – 13:30, Oct. 5, 2018 (FrA21 & FrB21) (detailed schedule below) |

| Place (room) | Room 4.R2 (Madrid Municipal Conference Centre, Madrid, SPAIN) |

The purpose of the tutorial

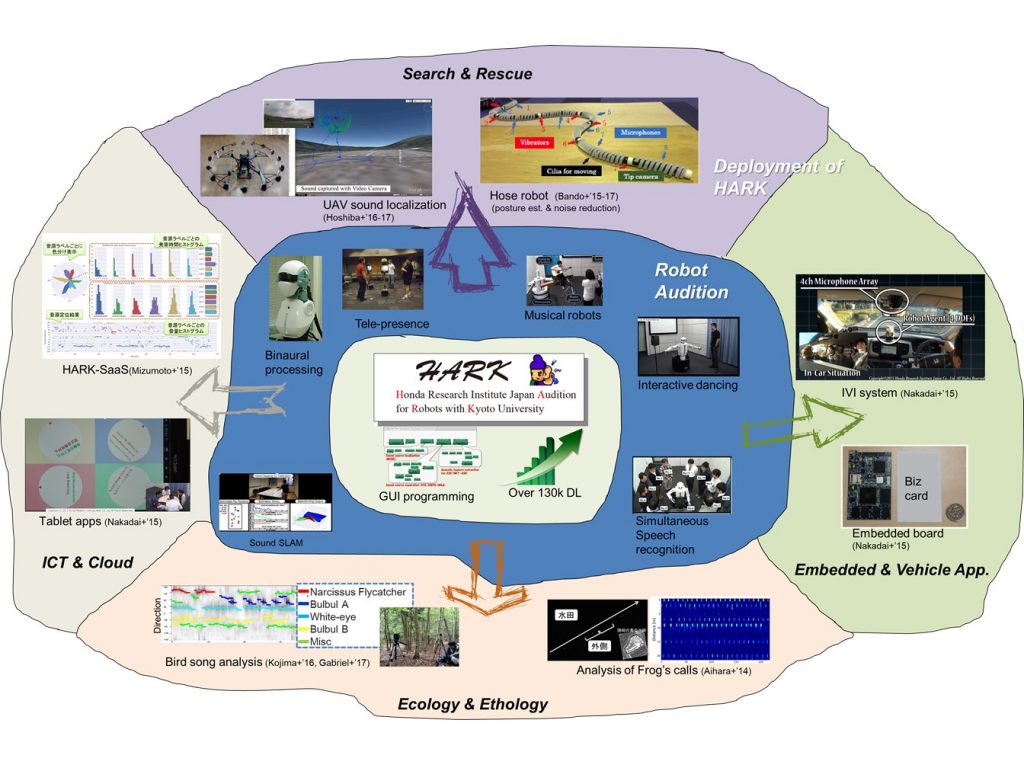

Auditory processing is essential for a robot to communicate with people. However, most studies in robotics have used a headset microphone placed close to the speaker's mouth. Robot audition was therefore proposed in 2000 to solve this problem, and it was registered as a keyword in the IEEE Robotics and Automation Society (RAS) in 2014. We have developed many robots to address problems such as ambient noise, speech noise, ego-noise, and reverberation, and have published papers mainly in robotics conferences such as IROS, ICRA, and Humanoids. The methods in those publications are collected as open source software called HARK (HRI-JP Audition for Robots with Kyoto University) [some demos]. HARK was released in 2008 with the aim of becoming an audio equivalent of OpenCV, and the total number of downloads exceeded 12,000 as of December 2017. HARK has been applied to various fields such as human-robot verbal interaction, drones, ICT and IVI systems, and ecological and ethological research. This tutorial will consist of lectures, practice sessions, introductions to case studies, and live demonstrations, and thus it will provide participants with the basic concepts of HARK as well as practical knowledge of it. We hope this tutorial will be a good opportunity to lower the barrier for researchers in robot audition, to increase awareness of HARK, and to extend HARK to more applications in various fields so that it can realize its full potential.

Note that this tutorial is sponsored by the Robotics Society of Japan (RSJ), a sponsor of IROS. It is therefore positioned as an extended version of the RSJ tutorial that is normally held during the IROS main conference (see RSJ Tutorial 2017 and RSJ Tutorial 2016).

HARK and Its Deployment

Who should attend this tutorial, and what are the benefits?

You should participate in this tutorial if you are one of the following:

- Researchers who are interested in and/or are working on robot audition and its related research areas

- People who want to implement robot audition technologies in their robots (HARK has a seamless interface with ROS for this.)

- People who want to learn robot audition technologies

- People who want to contribute to robot audition open source software

- People who want to use HARK in their own research areas besides robot audition

This tutorial covers the following topics. You will gain knowledge of HARK and the skills to use it.

- Overview of robot audition technologies

  - Introduction to robot audition

  - Fundamentals in sound source localization

  - Fundamentals in sound source separation

  - Fundamentals in automatic speech recognition

- Practice using HARK (PC and microphone array (provided) are needed)

  - Sound source localization

  - Sound source separation

  - Integration with automatic speech recognition

  - Integration with ROS

- Introduction of case studies with HARK

- Live demonstration of robot audition application

Program

This is a half-day tutorial consisting of two lectures, four practical lessons, introductions to two case studies, and two live demonstrations. Attendees will learn what robot audition and HARK are, how to use HARK, and how it works through live demonstrations. We will also organize a special session on robot audition in the main conference for those who want a more technical overview of HARK and robot audition. For more information, please visit the special session website.

| Time | Contents | Presenters |

| 9:00 – 9:05 | Opening remarks | Prof. Minoru Asada, Osaka University, Japan / Vice-President of RSJ |

| 9:05 – 9:15 | Lecture 1: Introduction | Prof. Hiroshi G. Okuno, Waseda University, Japan |

| 9:15 – 9:45 | Lecture 2: Overview of HARK | Prof. Kazuhiro Nakadai, Honda Research Institute Japan / Tokyo Institute of Technology, Japan |

| 9:45 – 10:15 | Practice 0: Preparation of Your Laptop | Dr. Kotaro Hoshiba, Kanagawa University, Japan |

| 10:15 – 10:45 | Practice 1: Sound Source Localization | Dr. Ryosuke Kojima, Kyoto University, Japan |

| 10:45 – 11:00 | Practice 2-1: Sound Source Separation and Automatic Speech Recognition | Dr. Katsutoshi Itoyama, Tokyo Institute of Technology, Japan |

| 11:00 – 11:30 | Coffee break | |

| 11:30 – 11:55 | Practice 2-2: Sound Source Separation and Automatic Speech Recognition | Dr. Katsutoshi Itoyama, Tokyo Institute of Technology, Japan |

| 11:55 – 12:25 | Practice 3: Integration with ROS | Dr. Osamu Sugiyama, Kyoto University, Japan |

| 12:25 – 12:40 | Case Study 1: Drone Audition | Prof. Makoto Kumon, Kumamoto University, Japan |

| 12:40 – 12:55 | Case Study 2: Bird Song Analysis | Prof. Reiji Suzuki, Nagoya University, Japan |

| 12:55 – 13:25 | Live Demos: 1. Sound Source Localization for Drones 2. Speech Enhancement for Active Scope Robots | Dr. Kotaro Hoshiba, Dr. Katsutoshi Itoyama |

| 13:25 – 13:30 | Closing remarks | Prof. Hiroshi G. Okuno |

Equipment to bring

Each participant needs a laptop PC and a microphone array for the practice sessions. Please bring your own laptop. We will provide a microphone array (Tamago) to each participant, but only 50 microphone arrays are available.

Organizers

Kazuhiro Nakadai

Principal Researcher, Honda Research Institute Japan Co., Ltd. /

Specially-Appointed Professor, Tokyo Institute of Technology

Hiroshi G. Okuno

Professor, Waseda University

Makoto Kumon

Associate Professor, Kumamoto University

Reiji Suzuki

Associate Professor, Nagoya University

Osamu Sugiyama

Lecturer, Kyoto University

Katsutoshi Itoyama

Specially-Appointed Lecturer, Tokyo Institute of Technology

Gokhan Ince

Assistant Professor, Istanbul Technical University

Ryosuke Kojima

Assistant Professor, Kyoto University

Kotaro Hoshiba

Assistant Professor, Kanagawa University

Please note that the organizers have held 14 full-day HARK tutorials in Japan and abroad, including one at Humanoids 2009.