IJCAI-PRICAI 2020 Tutorial on

Robot Audition Open Source Software HARK

Date and place

| Date & Time | January 8, 2021 18:40-22:00 (JST) [9:40-13:00 UTC] (detailed schedule below) |
| --- | --- |
| Place (room) | Online tutorial |

The IJCAI-PRICAI 2020 tutorial on HARK has concluded.

The YouTube videos on HARK TV are available here.

If you register for this tutorial (T46) when you register for IJCAI-PRICAI 2020, the registration chair will send you an email containing an invitation to the Slack workspace for this tutorial. Because all information about this tutorial will be announced in #general in that workspace, please create a Slack account and log in to the workspace using the invitation you received. If you do not receive an email containing a Slack invitation, please let us know (hark18-reg _at_ hark.jp).

What is this tutorial about?

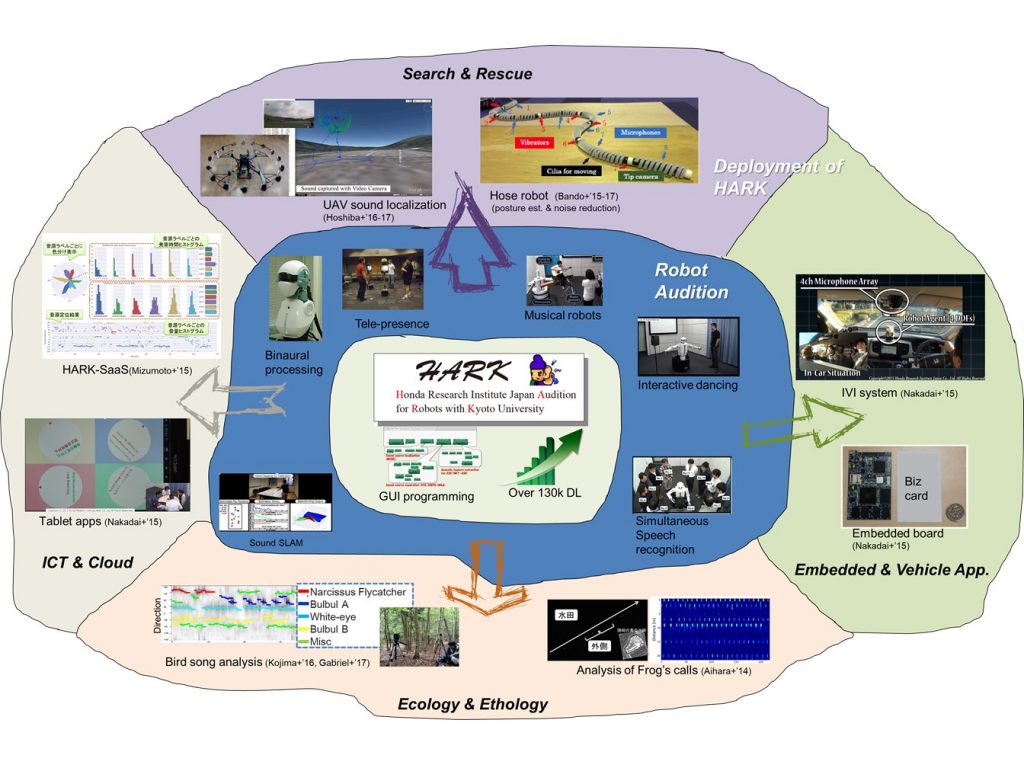

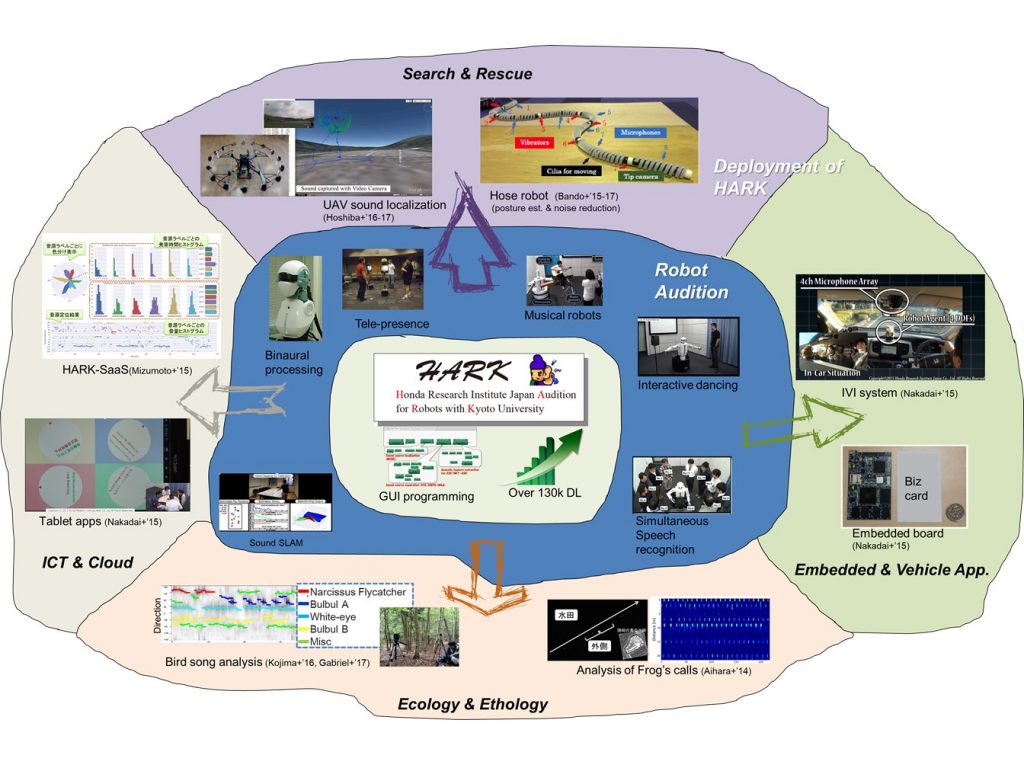

This is a tutorial on the open source robot audition software HARK (HRI-JP Audition for Robots with Kyoto University). HARK was released in 2008 as a collection of robot audition functions, such as sound source localization, sound source separation, and automatic speech recognition, for real-world applications including robots. It aims to be the audio equivalent of OpenCV. The total number of downloads exceeded 16,000 as of December 2019.

The tutorial will consist of two lectures, four hands-on lessons, two case study reports, and two live demonstrations. Attendees will learn what robot audition and HARK are, how to use HARK, and how it works through live demonstrations. For the hands-on lessons, we ask every participant to bring their own laptop PC (CPU: Core i5/i7 series, memory: > 4 GB, with a headphone jack) with one USB 3.0 port for a bootable USB device and another USB port for connecting a microphone array. We will provide an Ubuntu-bootable USB device and lend a microphone array device to each participant. We also recommend bringing headphones for listening practice. During the tutorial, student teaching assistants will be available to answer participants’ questions at any time.

Background of the tutorial

Computational Auditory Scene Analysis (CASA) has been studied in artificial intelligence for many years. CASA workshops were organized in 1995, 1997, and 1999. At AAAI 2000, robot audition was proposed as an extension of CASA to real-world applications. At that time, robotics was starting to grow rapidly, but most studies in robotics used a headset microphone placed close to the speaker’s mouth. Robot audition aims to remove this limitation by building functions that enable a robot to listen to sounds with its own ears. It has been evolving in both artificial intelligence and robotics for the last 20 years. Sound source localization, sound source separation, and automatic speech recognition have been developed as highly noise-robust functions that cope with ambient noise, speech noise, ego-noise, and reverberation, with many publications at top conferences in artificial intelligence, robotics, and signal processing, such as IJCAI, AAAI, PRICAI, IROS, ICRA, ICASSP, and INTERSPEECH.

In 2008, the methods in those publications were collected into open source software called HARK (HRI-JP Audition for Robots with Kyoto University), which aims to be the audio equivalent of OpenCV. The total number of downloads exceeded 16,000 as of December 2019. Although we have held 17 full-day HARK tutorials in Japan and abroad, including at IEEE Humanoids-2009 and IROS 2018, supported by JSAI and other societies, we have not yet held a tutorial at a conference on artificial intelligence. We believe that IJCAI being held in Japan makes this the right time for a tutorial at IJCAI.

Although HARK provides various auditory functions that are useful for real-world problems, the number of people who know about HARK appears to be limited in the field of artificial intelligence. In practice, combinations of HARK with deep learning techniques and end-to-end robot audition techniques have been reported, and some of them are being deployed in real-world settings. People in the field of artificial intelligence will benefit from knowing HARK and related techniques. Therefore, we will organize this tutorial from the following viewpoints to maximize the benefit to IJCAI participants:

- To increase the awareness of HARK

- To increase users’ overall ability when using HARK

- To lower the barrier to introduce robot audition techniques

- To expand applications of HARK into AI fields to realize its full potential

HARK and Its Deployment

Outline of the tutorial

The following is a list of topics (keywords) addressed in the tutorial.

- Overview of robot audition technologies

  - Introduction to robot audition

  - Basics in sound source localization

  - Basics in sound source separation

  - Basics in automatic speech recognition

- Practice using HARK (PC and microphone array (provided) are necessary)

  - Sound source localization

  - Sound source separation

  - Integration with automatic speech recognition

  - Integration with ROS

- Reports of case studies with HARK

  - Drone audition for rescue and search

  - Bird song analysis for ornithology to analyze birds’ behaviors and communication

- Live demonstrations of robot audition applications

  - Sound source localization for drone audition

  - Speech enhancement for active scope robots

The detailed schedule for the above items is given in the Program section below.

Program

This is a half-day (2-slot) tutorial consisting of two lectures, four hands-on lessons, introduction of two case studies and two live demonstrations. Attendees will learn what robot audition and HARK are, how to use HARK, and how it works with a live demonstration.

| Slot 1 (1:35) | Contents | Presenters |
| --- | --- | --- |
| 18:40-18:50 (10 min) | Opening remark & Lecture 1: Introduction | Prof. Hiroshi G. Okuno, Waseda University, Japan |
| 18:50-19:20 (30 min) | Lecture 2: Overview of HARK | Prof. Kazuhiro Nakadai, Honda Research Institute of Japan / Tokyo Institute of Technology, Japan |
| 19:20-19:40 (20 min) | Practice 0: Preparation of Your Laptop | Taiki Yamada, Tokyo Institute of Technology, Japan |
| 19:40-20:15 (35 min) | Practice 1: Sound Source Localization | Dr. Ryosuke Kojima, Kyoto University, Japan |

| Slot 2 (1:40) | Contents | Presenters |
| --- | --- | --- |
| 20:20-20:40 (20 min) | Practice 2: Sound Source Separation & ASR | Dr. Kotaro Hoshiba, Kanagawa University, Japan |
| 20:40-21:15 (35 min) | Practice 3: Integration with ROS and MQTT | Dr. Katsutoshi Itoyama, Tokyo Institute of Technology, Japan |
| 21:15-21:35 (20 min) | Case Study 1: Drone Audition | Prof. Makoto Kumon, Kumamoto University, Japan |
| 21:35-21:55 (20 min) | Case Study 2: Bird Song Analysis | Prof. Reiji Suzuki, Nagoya University, Japan |
| 21:55-22:00 (5 min) | Closing Remarks | Prof. Hiroshi G. Okuno, Waseda University, Japan |

Target Audience

Robot audition bridges artificial intelligence and robotics. From the viewpoint of artificial intelligence, robot audition is an interesting topic because it provides auditory functions for dealing with real-world problems. Also, many people working in artificial intelligence are interested in multidisciplinary research topics as a way to learn about real-world problems and applications in other fields. Robot audition is a suitable topic for this purpose. The intended audience includes the following:

- People who are interested in and/or are working on robot audition and its related research area/problems

- People who want to introduce robot audition technologies to their robots (it is easy because HARK has a seamless interface with ROS) and related applications

- People who want to learn robot audition technologies

- People who want to contribute to robot audition open source software

- People who want to use HARK in their own research areas besides robot audition

Since the tutorial includes not only hands-on lessons but also lectures on the basics of robot audition, participants do not need any prior knowledge of robot audition. However, because it includes hands-on lessons, we recommend that participants know basic operations of Ubuntu (Linux). During the tutorial, an adequate number of student teaching assistants will be available, so each participant can ask questions at any time.

Equipment to prepare

Each participant should prepare the following items to attend this tutorial:

- A laptop computer. Requirements are as follows:

  - CPU: Core i5 / i7 series

  - 4 GB of RAM

  - VMware or VirtualBox installed in advance and working properly

  - More than 50 GB of storage for the VM

- A device for listening to sound, such as headphones or a loudspeaker

Organizers

Kazuhiro Nakadai

Principal Researcher, Honda Research Institute Japan Co., Ltd. /

Specially-Appointed Professor, Tokyo Institute of Technology

bio

- Background in the tutorial area, including a list of publications/presentation:

A proposer of robot audition. Binaural and microphone array based robot audition, drone audition, bird song analysis. One of the main developers of robot audition open source software HARK.

- Kazuhiro Nakadai, Tino Lourens, Hiroshi G. Okuno, and Hiroaki Kitano. “Active Audition for Humanoid”, In Proceedings of AAAI-2000, pp. 832–839, AAAI, Jul. 2000.

- Kazuhiro Nakadai, Ken-ichi Hidai, Hiroshi Mizoguchi, Hiroshi G. Okuno, and Hiroaki Kitano. “Real-Time Auditory and Visual Multiple-Object Tracking for Robots”, In Proceedings of International Joint Conference on Artificial Intelligence (IJCAI-2001), pp. 1425–1432, IJCAI, 2001.

- Kazuhiro Nakadai, Toru Takahashi, Hiroshi G. Okuno, Hirofumi Nakajima, Yuji Hasegawa, Hiroshi Tsujino. “Design and Implementation of Robot Audition System HARK”, Advanced Robotics, Vol. 24 (2010) 739-761, VSP and RSJ.

- Kazuhiro NAKADAI, Hiroshi G. OKUNO, Takeshi MIZUMOTO, “Development, Deployment and Applications of Robot Audition Open Source Software HARK”, Journal of Robotics and Mechatronics, pp. 16-25, Fuji Technology Press Ltd., Feb. 2017.

- Citation to an available example of work in the area:

Google Scholar Citations: 6647, Google Scholar h-index:43

- Evidence of teaching experience:

Teaching “Introduction to Robot Audition” with some other lectures at Tokyo Institute of Technology. Giving a lecture at all HARK tutorials.

- Evidence of scholarship in AI or Computer Science:

No scholarships received. As an AI-related award, received the Amity Research Award in 2020 from Amity University, India, for significant contribution in the field of Artificial Intelligence.

Hiroshi G. Okuno

Professor, Waseda University

bio

- Background in the tutorial area, including a list of publications/presentation:

A proposer of robot audition. Binaural and microphone array based robot audition, drone audition, bird song analysis. Experience in leading several national and international academic projects. Two main papers related to the tutorial:

- Kazuhiro Nakadai, Toru Takahashi, Hiroshi G. Okuno, Hirofumi Nakajima, Yuji Hasegawa, Hiroshi Tsujino. “Design and Implementation of Robot Audition System ‘HARK’ – Open Source Software for Listening to Three Simultaneous Speakers”, Advanced Robotics, Vol.24, Issue 5-6 (May 2010) pp.739-761. doi:10.1163/016918610X493561

- Hiroshi G. Okuno, Kazuhiro Nakadai: ROBOT AUDITION: ITS RISE AND PERSPECTIVES, Proceedings of 2015 International Conference on Acoustics, Speech and Signal Processing (ICASSP 2015), pp.5610-5614, April, 2015. doi:10.1109/ICASSP.2015.7179045

- Citation to an available example of work in the area:

Google Scholar Citations: 10723, Google Scholar h-index:50, Scopus h-index:31, Web of Science h-index: 22. International tutorials on HARK: four times. Keynote and special invited talks on Robot Audition including at IEA/AIE-2016, IEEE SME-2018, and IEEE Confluence-2019.

- Evidence of teaching experience:

Teaching experience of “Introduction to Robot Audition”: For more than 10 years at Graduate School of Informatics, Kyoto University; for 6 years at Graduate Program for Embodiment Informatics, Waseda University.

- Evidence of scholarship in AI or Computer Science:

Fellow of IEEE, JSAI, IPSJ, and RSJ for contribution to robot audition.

Makoto Kumon

Associate Professor, Kumamoto University

bio

- Background in the tutorial area, including a list of publications/presentation:

Binaural robot audition, drone audition.

- Mizuho WAKABAYASHI, Hiroshi G. OKUNO and Makoto KUMON, “Multiple Sound Source Position Estimation by Drone Audition Based on Data Association Between Sound Source Localization and Identification,” in IEEE Robotics and Automation Letters, vol. 5, no. 2, pp. 782-789, April 2020. doi: 10.1109/LRA.2020.2965417

- Kai WASHIZAKI, Mizuho WAKABAYASHI and Makoto KUMON, “Position Estimation of Sound Source on Ground by Multirotor Helicopter with Microphone Array,” Proceedings of 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, pp. 1980-1985, Oct., 2016

- Takahiro ISHIKI and Makoto KUMON, “Design Model of Microphone Arrays for Multirotor Helicopters,” Proceedings of International Symposium on Intelligent Robots and Systems, Hamburg, pp.6143-6148, Oct. 2015

- Citation to an available example of work in the area:

Google Scholar Citations: 636, Google Scholar h-index:12

- Evidence of teaching experience:

Teaching “Fundamentals of Information Processing”, “Intelligent Mechanical Systems” and “Physics and Chemistry” at Kumamoto University.

Reiji Suzuki

Associate Professor, Nagoya University

bio

- Background in the tutorial area, including a list of publications/presentation:

Bird song analysis. A developer of HARKBird.

- Shinji Sumitani, Reiji Suzuki, Shiho Matsubayashi, Takaya Arita, Kazuhiro Nakadai, Hiroshi G. Okuno, “Fine-scale observations of spatio-spectro-temporal dynamics of bird vocalizations using robot audition techniques”, Remote Sensing in Ecology and Conservation (accepted).

- Shinji Sumitani, Reiji Suzuki, Naoaki Chiba, Shiho Matsubayashi, Takaya Arita, Kazuhiro Nakadai, Hiroshi G. Okuno, “An Integrated Framework for Field Recording, Localization, Classification and Annotation of Birdsongs Using Robot Audition Techniques — HARKBird 2.0”, Proc. of 2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP2019), pp. 8246-8250 (2019/05/17).

- Reiji Suzuki, Shinji Sumitani, Shiho Matsubayashi, Takaya Arita, Kazuhiro Nakadai, Hiroshi G. Okuno, “Field observations of ecoacoustic dynamics of a Japanese bush warbler using an open-source software for robot audition HARK”, Journal of Ecoacoustics, 2: #EYAJ46 (11 pages) (2018/06).

- Reiji Suzuki, Shiho Matsubayashi, Fumiyuki Saito, Tatsuyoshi Murate, Tomohisa Masuda, Koichi Yamamoto, Ryosuke Kojima, Kazuhiro Nakadai, Hiroshi G. Okuno, “A Spatiotemporal Analysis of Acoustic Interactions between Great Reed Warblers (Acrocephalus arundinaceus) Using Microphone Arrays and Robot Audition Software HARK”, Ecology and Evolution, 8(1): 812-825 (2018/01).

- Citation to an available example of work in the area:

Google Scholar Citations: 641, Google Scholar h-index:12

- Evidence of teaching experience:

Teaching experience of “Complex systems programing” at Graduate School of Information Science/Informatics in Nagoya University, Japan. “Creative networking”, “Programing 1”, “Computational informatics 8”, etc. at School of Informatics in Nagoya University, Japan.

- Evidence of scholarship in AI or Computer Science:

JSPS International Program for Excellent Young Researcher Overseas Visits (2010-2011) (Visiting scholar at UCLA, USA)

Katsutoshi Itoyama

Specially-Appointed Associate Professor (Lecturer), Tokyo Institute of Technology

bio

- Background in the tutorial area, including a list of publications/presentation:

Speech enhancement, rescue and search robots, sound source separation.

- Kazuki Shimada, Yoshiaki Bando, Masato Mimura, Katsutoshi Itoyama, Kazuyoshi Yoshii, Tatsuya Kawahara, “Unsupervised Speech Enhancement Based on Multichannel NMF-Informed Beamforming for Noise-Robust Automatic Speech Recognition”, IEEE/ACM Transactions on Audio, Speech, and Language Processing, 27(5), 960-971, 2019. DOI: 10.1109/TASLP.2019.2907015

- Masashi Konyo, Yuichi Ambe, Hikaru Nagano, Yu Yamauchi, Satoshi Tadokoro, Yoshiaki Bando, Katsutoshi Itoyama, Hiroshi G Okuno, Takayuki Okatani, Kanta Shimizu, Eisuke Ito, “ImPACT-TRC thin serpentine robot platform for urban search and rescue”, Disaster Robotics, 25-76, Springer, 2019.

- Fumitoshi Matsuno, Tetsushi Kamegawa, Wei Qi, Tatsuya Takemori, Motoyasu Tanaka, Mizuki Nakajima, Kenjiro Tadakuma, Masahiro Fujita, Yosuke Suzuki, Katsutoshi Itoyama, Hiroshi G Okuno, Yoshiaki Bando, Tomofumi Fujiwara, Satoshi Tadokoro, “Development of Tough Snake Robot Systems”, Disaster Robotics, 267-326, Springer, 2019.

- Yoshiaki Bando, Hiroshi Saruwatari, Nobutaka Ono, Shoji Makino, Katsutoshi Itoyama, Daichi Kitamura, Masaru Ishimura, Moe Takakusaki, Narumi Mae, Kouei Yamaoka, Yutaro Matsui, Yuichi Ambe, Masashi Konyo, Satoshi Tadokoro, Kazuyoshi Yoshii, Hiroshi G Okuno, “Low latency and high quality two-stage human-voice-enhancement system for a hose-shaped rescue robot”, Journal of Robotics and Mechatronics, 29(1), 198-212, 2017. DOI: 10.20965/jrm.2017.p0198

- Kousuke Itakura, Yoshiaki Bando, Eita Nakamura, Katsutoshi Itoyama, Kazuyoshi Yoshii, Tatsuya Kawahara, “Bayesian multichannel audio source separation based on integrated source and spatial models”, IEEE/ACM Transactions on Audio, Speech, and Language Processing, 26(4), 831-846, 2018. DOI: 10.1109/TASLP.2017.2789320

- Citation to an available example of work in the area:

Google Scholar Citations: 870, Google Scholar h-index: 15

- Evidence of teaching experience:

Teaching experience of “Speech Processing Advanced” and “Computer Science Laboratory and Exercise 4” at Kyoto University, and “Research Opportunity A” at Tokyo Institute of Technology.

- Evidence of scholarship in AI or Computer Science:

JSPS Research Fellowship for Young Scientists (2008-2011)

Ryosuke Kojima

Assistant Professor, Kyoto University

bio

- Background in the tutorial area, including a list of publications/presentation:

Microphone array processing and its integration with machine learning.

- Ryosuke KOJIMA, Osamu SUGIYAMA, Kotaro HOSHIBA, Kazuhiro NAKADAI, Reiji SUZUKI, Charles E. TAYLOR, “Bird Song Scene Analysis Using a Spatial-Cue-Based Probabilistic Model”, Journal of Robotics and Mechatronics, pp. 236-246, Fuji Technology Press Ltd., Feb. 2017.

- Ryosuke KOJIMA, Osamu SUGIYAMA, Kotaro HOSHIBA, Reiji SUZUKI, Kazuhiro NAKADAI, “HARK-Bird-Box: A Portable Real-time Bird Song Scene Analysis System”, Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2018), pp. 2497-2502, IEEE, Madrid, Spain, Oct. 2018.

- Ryosuke KOJIMA, Osamu SUGIYAMA, Kotaro HOSHIBA, Reiji SUZUKI, Kazuhiro NAKADAI, “A Spatial-Cue-Based Probabilistic Model for Bird Song Scene Analysis”, Proceedings of the 4th IEEE International Conference on Data Science and Advanced Analytics, pp. 395-404, IEEE, Oct. 2017. doi:10.1109/DSAA.2017.34

- Citation to an available example of work in the area:

Google Scholar Citations: 150, Google Scholar h-index:8

- Evidence of teaching experience:

Teaching experience of “introduction of machine learning” in the KD DHIEP Program (Kyoto University and Deloitte Data-Driven Healthcare Innovation Evangelist promotion Program) at the Graduate School of Medicine, Kyoto University.

- Evidence of scholarship in AI or Computer Science:

Research promotion award from the Robotics Society of Japan, 2015

Kotaro Hoshiba

Assistant Professor, Kanagawa University

bio

- Background in the tutorial area, including a list of publications/presentation:

Drone audition, acoustic signal processing.

- Kenzo NONAMI, Kotaro HOSHIBA, Kazuhiro NAKADAI, Makoto KUMON, Hiroshi G. OKUNO, Yasutada TANABE, Koichi YONEZAWA, Hiroshi TOKUTAKE, Satoshi SUZUKI, Kohei YAMAGUCHI, Shigeru SUNADA, Toshiyuki NAKATA, Ryusuke NODA, Hao LIU, “Recent R&D Technologies and Future Prospective of Flying Robot in Tough Robotics Challenge”, Disaster Robotics, Springer Tracts in Advanced Robotics, pp. 77-142, Springer, Jan. 2019.

- Kotaro HOSHIBA, Kai WASHIZAKI, Mizuho WAKABAYASHI, Takahiro ISHIKI, Makoto KUMON, Yoshiaki BANDO, Daniel P. GABRIEL, Kazuhiro NAKADAI, Hiroshi G. OKUNO, “Design of UAV-Embedded Microphone Array System for Sound Source Localization in Outdoor Environments”, Sensors, pp. 1-16, MDPI, Nov. 2017.

- Citation to an available example of work in the area:

Google Scholar Citations: 114, Google Scholar h-index: 5

- Evidence of teaching experience:

Teaching experience of “Introduction to electrical, electronics and information”, “Experiments for electrical, electronics and information 1, 2” at Department of Electrical, Electronics and Information Engineering in Kanagawa University, Japan.

Please note that the organizers have held 17 full-day HARK tutorials in Japan and abroad, including at Humanoids-2009 and IROS 2018.

Supports