| HARK Cookbook Version 2.0.0. (Revision: 6493) |

Run the offline localization sample in the Localization directory. This script displays the sound source localization result for a 4-channel audio file (Multispeech.wav) that includes the simultaneous speech of two speakers located at -30 and 30 degrees. You can find the file in the data directory.

Run the script as follows:

> ./demo.sh offline

You will then see output like Figure 14.6 in the terminal, along with a graph of the sound source localization result.

    UINodeRepository::Scan()
    Scanning def /usr/lib/flowdesigner/toolbox
    done loading def files
    loading XML document from memory
    done!
    Building network :MAIN
    TF was loaded by libharkio2.
    1 heights, 72 directions, 1 ranges, 7 microphones, 512 points
    Source 0 is created.
    (skipped)
    Source 5 is removed.
    UINodeRepository::Scan()
    (skipped)

After running the script, you will find two text files: Localization.txt and log.txt. If they are missing, the execution failed. Check the following:

Check that MultiSpeech.wav is in the ../data directory. This file is a real recording made with a Kinect, which is supported by HARK. The network cannot run without it, since it is the input file.

Check that the kinect_loc.dat file is in the ../config directory. It is the transfer function file for localization. See LocalizeMUSIC for details.
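The two checks above can also be scripted. The following is a minimal sketch, not part of the HARK samples: the helper name `missing_inputs` is hypothetical, and the paths are the ones named in the checklist, taken relative to the Localization directory.

```python
from pathlib import Path

# Paths named in the checklist, relative to the Localization directory.
REQUIRED_FILES = [
    Path("../data/MultiSpeech.wav"),   # input recording
    Path("../config/kinect_loc.dat"),  # transfer function file for localization
]


def missing_inputs(base_dir="."):
    """Return the required files that do not exist relative to base_dir.

    Hypothetical helper for illustration; it only tests for existence and
    does not validate the contents of either file.
    """
    base = Path(base_dir)
    return [p for p in REQUIRED_FILES if not (base / p).is_file()]


if __name__ == "__main__":
    missing = missing_inputs()
    if missing:
        for path in missing:
            print(f"missing: {path}")
    else:
        print("all input files found")
```

Run it from the Localization directory before `./demo.sh offline`; any path it prints should be restored before retrying.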

Localization.txt contains the sound source localization results generated by SaveSourceLocation in text format. It contains the frame number and the sound source directions for each frame. You can load it with LoadSourceLocation. See the HARK document for the file format.

log.txt contains the MUSIC spectrum generated by LocalizeMUSIC with the DEBUG property set to true. The MUSIC spectrum is a kind of confidence measure for the presence of sound, calculated for each time and direction: a high value indicates that a sound source is likely present. See LocalizeMUSIC in the HARK document for details. We also provide a program for visualizing the MUSIC spectrum. Run the following command:

> python plotMusicSpec.py log.txt

If this fails, your system may be missing the necessary Python packages. Install them with the following command:

For Ubuntu:

> sudo apt-get install python python-matplotlib

The resulting plot can be used for tuning the THRESH property of SourceTracker.
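To make the link between the MUSIC spectrum and a threshold concrete, here is a small sketch on synthetic data. It assumes the spectrum is already available as a frames-by-directions array; parsing log.txt is not shown, since its format is described in the HARK document. The function name `directions_above` and all numeric values are made up for illustration, and the code does not reproduce the internal logic of SourceTracker.

```python
import numpy as np


def directions_above(spectrum, directions_deg, thresh):
    """Return the directions whose peak value over all frames exceeds thresh.

    spectrum: array of shape (frames, directions), arbitrary units.
    """
    peak_per_direction = spectrum.max(axis=0)
    return [d for d, p in zip(directions_deg, peak_per_direction) if p > thresh]


# Synthetic spectrum: 100 frames, directions from -90 to 90 in 5-degree steps.
directions_deg = list(range(-90, 91, 5))
rng = np.random.default_rng(0)
spectrum = 20.0 + rng.random((100, len(directions_deg)))  # background level
spectrum[:, directions_deg.index(-30)] += 10.0            # stronger source
spectrum[:, directions_deg.index(30)] += 8.0              # weaker source

# Compare a threshold that is too low, one that separates both sources
# from the background, and one that is too high for the weaker source.
for thresh in (19.0, 25.0, 29.5):
    print(thresh, directions_above(spectrum, directions_deg, thresh))
```

In this synthetic case, the lowest threshold lets every direction through, the middle one keeps only the two sources, and the highest one drops the weaker source. The same trade-off is what you look for when reading the plot.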

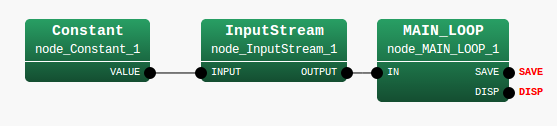

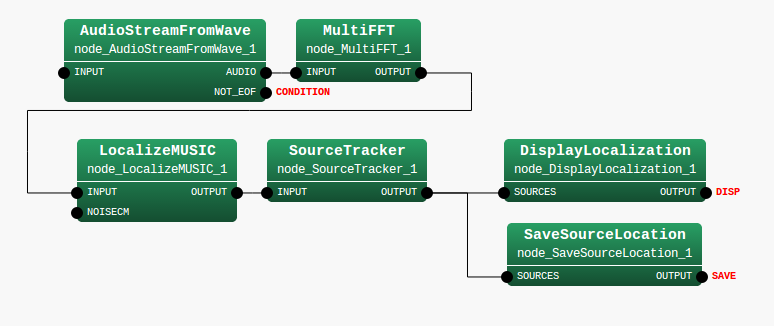

This sample has nine nodes: three in MAIN (subnet) and six in MAIN_LOOP (iterator). MAIN (subnet) and MAIN_LOOP (iterator) are shown in Figures 14.7 and 14.8. AudioStreamFromWave loads the waveform, MultiFFT transforms it into a spectrum, LocalizeMUSIC localizes the sound sources, and SourceTracker tracks the localization results. DisplayLocalization then displays them, and SaveSourceLocation stores them in a file.
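As a rough picture of the first two stages of this pipeline, the sketch below frames a multichannel signal and applies an FFT to each frame with NumPy. It is not HARK's implementation: the function name `frame_and_fft` is hypothetical, the Hanning window is an assumption, and the input is synthetic noise rather than the sample recording. The frame length and shift default to the LENGTH and ADVANCE values listed in Table 14.9.

```python
import numpy as np


def frame_and_fft(signal, length=512, advance=160):
    """Split a (channels, samples) signal into overlapping frames and FFT them.

    Returns a complex array of shape (frames, channels, length // 2 + 1).
    """
    channels, samples = signal.shape
    if samples < length:
        raise ValueError("signal is shorter than one frame")
    n_frames = 1 + (samples - length) // advance
    window = np.hanning(length)  # window choice is an assumption
    frames = np.stack([
        signal[:, i * advance:i * advance + length] * window
        for i in range(n_frames)
    ])
    # Real-input FFT along the time axis of each frame.
    return np.fft.rfft(frames, axis=-1)


# One second of synthetic 4-channel audio at 16 kHz.
signal = np.random.default_rng(0).standard_normal((4, 16000))
spectra = frame_and_fft(signal)
print(spectra.shape)
```

Each frame yields one multichannel spectrum; a localization stage such as LocalizeMUSIC would then operate on a sequence of these spectra.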

Table 14.9 summarizes the main parameters. The most important parameter is A_MATRIX, which specifies the file name of the transfer function for localization. If you use a microphone array that we support, you can download the transfer function file from the HARK web page. If you want to use your own microphone array, you need to create the file with harktool.

| Node name | Parameter name | Type | Value |
|---|---|---|---|
| MAIN_LOOP | LENGTH | | 512 |
| | ADVANCE | | 160 |
| | SAMPLING_RATE | | 16000 |
| | A_MATRIX | | ARG2 |
| | FILENAME | | ARG3 |
| | DOWHILE | | (empty) |
| LocalizeMUSIC | NUM_CHANNELS | | 4 |
| | LENGTH | | LENGTH |
| | SAMPLING_RATE | | SAMPLING_RATE |
| | A_MATRIX | | A_MATRIX |
| | PERIOD | | 50 |
| | NUM_SOURCE | | 1 |
| | MIN_DEG | | -90 |
| | MAX_DEG | | 90 |
| | LOWER_BOUND_FREQUENCY | | 300 |
| | HIGHER_BOUND_FREQUENCY | | 2700 |
| | DEBUG | | false |
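The values in Table 14.9 can be converted into more familiar units with a little arithmetic. The sketch below does so under two assumptions that should be checked against the HARK document: that PERIOD is counted in frames, and that the frequency bounds are mapped to FFT bins by simple rounding inward. The variable names are illustrative only.

```python
import math

# Values from Table 14.9.
LENGTH = 512                   # samples per frame
ADVANCE = 160                  # samples between frame starts
SAMPLING_RATE = 16000          # Hz
PERIOD = 50                    # assumed to be counted in frames
LOWER_BOUND_FREQUENCY = 300    # Hz
HIGHER_BOUND_FREQUENCY = 2700  # Hz

frame_ms = 1000 * LENGTH / SAMPLING_RATE     # duration of one frame
shift_ms = 1000 * ADVANCE / SAMPLING_RATE    # time between frames
bin_hz = SAMPLING_RATE / LENGTH              # width of one FFT bin
period_s = PERIOD * ADVANCE / SAMPLING_RATE  # time spanned by PERIOD frames

# Assumption: bins lying fully inside the frequency bounds are used.
first_bin = math.ceil(LOWER_BOUND_FREQUENCY / bin_hz)
last_bin = math.floor(HIGHER_BOUND_FREQUENCY / bin_hz)

print(f"frame length: {frame_ms} ms, frame shift: {shift_ms} ms")
print(f"bin width: {bin_hz} Hz, bins {first_bin}..{last_bin}")
print(f"localization period: {period_s} s")
```

With these settings, each frame covers 32 ms, frames start every 10 ms, and 50 frames span 0.5 s.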